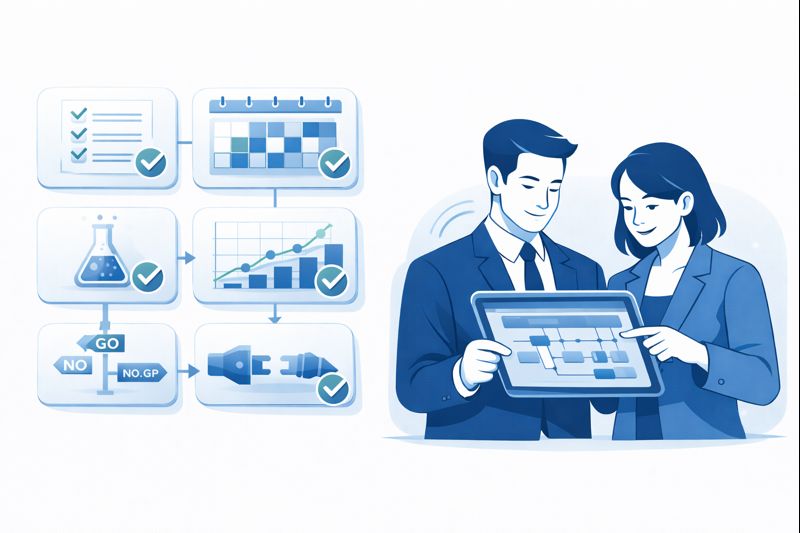

Most “proofs of concept” drag on for months, run in synthetic environments, and prove almost nothing about how a platform will behave on your real queues, with your real agents, on your worst days. A real PoC is different: it runs against production-like traffic, validates uptime, routing, CTI, AI and reporting, and gives you enough evidence to sign (or walk away) with confidence. This 14-day checklist shows you how to design, execute and score a PoC that stress-tests a contact center stack instead of simply repeating the polished demo.

1. What a “Real” PoC Proves (And Why Most Pilots Fail)

A real PoC proves three things: that the platform works with your traffic, your stack and your people. That means you see calls and messages flowing from real numbers, into your core CRM, routed by realistic rules, handled by actual agents and supervisors. It is the live continuation of the questions you ask in a modern RFP and the scenarios you test in a demo scorecard, but now with your own data and KPIs on the line.

Most PoCs fail because they are too abstract. Vendors spin up generic tenants, run fake calls with unrealistic scripts, and avoid touching complex pieces like infrastructure, integrations or AI QA coverage. After 30–60 days, everyone is tired, budget cycles have moved on, and the “pilot” ends with a decision made mostly on slides again. A disciplined 14-day PoC forces focus: just enough scope to prove fit, just enough time pressure to avoid drift.

| Step | What You Must Do | Primary Owner | Day Window | Success Evidence | Helpful Deep-Dive |
|---|---|---|---|---|---|
| 1 | Define PoC goals in one page: channels, regions, seats, KPIs, risks you want to de-risk. | CX Lead + CIO | Day 1 | Everyone agrees on 3–5 measurable success criteria (FCR, AHT, SLA, NPS, CSAT, CES). | CX metrics playbook |
| 2 | Select 2–3 real use cases: e.g., WISMO, card block, appointment reschedule, password reset. | Operations | Day 1 | Each use case has volume estimates, scripts, outcomes and KPIs defined. | Top 50 use cases |
| 3 | Choose test numbers and regions (DIDs, toll-free, GCC, etc.) and map to PoC tenant. | Telephony / Network | Days 1–2 | Inbound calls reach the PoC platform reliably from target markets. | Uptime architectures |
| 4 | Wire CTI to one CRM (Salesforce, HubSpot, Zendesk) in a scoped sandbox or pilot org. | Systems / CRM Owner | Days 1–3 | Screen pops, logging, recordings and dispositions appear where they should. | CRM + CTI checklist |
| 5 | Implement basic ACD: skills, priorities and business hours for test queues. | Contact Center Architect | Days 2–3 | Calls for each use case hit the correct queues with predictable routing patterns. | ACD explained |
| 6 | Configure IVR / self-service flows for the chosen use cases (press 1, voice, or WhatsApp). | CX + Telephony | Days 2–4 | Customers can self-serve simple tasks or reach agents with context preserved. | AI IVR & voicebot stack |
| 7 | Add 8–20 pilot agents and supervisors, including WFH and low-bandwidth scenarios. | WFM + Team Leads | Days 3–4 | Agents log in from real devices; headsets and networks are validated. | Device & headset guide |
| 8 | Enable AI agent assist and configure at least one QA scorecard for each test queue. | QA Lead | Days 4–5 | Real calls generate AI prompts and auto-scored QA entries aligned with behaviours. | QA templates & AI scoring |
| 9 | Turn on full-coverage call recording with secure storage and retention aligned to policy. | Compliance | Days 4–5 | Recording indicators, consent flows and access controls all behave as designed. | Recording compliance guide |
| 10 | Configure at least one outbound list and dialer mode with TCPA-safe rules. | Sales / Collections Ops | Days 5–6 | Dialer runs without hitting blocked times, DNC or consent issues; audit trails are clear. | TCPA workflows |
| 11 | Simulate a small incident: degraded network or partial queue spike; observe behaviour. | IT + NOC | Days 6–7 | Alerts fire, dashboards show accurate status, and failover or throttling works. | CIO survival guide |
| 12 | Run 2–3 full PoC days with live traffic, closely monitored by supervisors and analysts. | Supervisors | Days 8–10 | Meaningful volume across all PoC use cases; agents and customers complete journeys. | WFM in cloud centers |
| 13 | Pull reporting for those days: SLAs, AHT, FCR, abandonment, queue and agent views. | Analytics | Days 10–11 | Numbers match expectations and are easy to extract, slice and share with leadership. | COO analytics dashboards |
| 14 | Estimate cost based on PoC usage: seats, minutes, storage, AI units and add-ons. | Finance + Procurement | Days 11–12 | A draft invoice can be generated; no surprises on AI or storage sprawl. | Pricing breakdown |
| 15 | Score the PoC against your weighted criteria and document key trade-offs. | Project Steering Group | Day 13 | Scorecard shows clear pass/fail on goals; red/yellow items have mitigation plans. | Use-case-first buyer framework |
| 16 | Decide on next step: expand, iterate, or stop, and communicate the rationale. | Exec Sponsor | Day 14 | Go / no-go decision recorded with evidence instead of intuition. | 12-month integration roadmap |
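Steps 15 and 16 hinge on a weighted scorecard. The sketch below shows one way to compute it, assuming a 0–5 rating per criterion, relative weights, and an optional "must-pass" flag for hard gates such as CTI reliability. The class and function names, the criterion names, the 3.0 per-criterion threshold and the 3.5 overall pass mark are all illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    weight: float            # relative importance; normalised by the total weight
    score: float             # 0-5 rating agreed by the steering group
    must_pass: bool = False  # hard gate: failing it fails the PoC regardless of the total
    threshold: float = 3.0   # illustrative minimum score for this criterion to count as met


def weighted_score(criteria: list[Criterion]) -> float:
    """Weighted average of the scores, on the same 0-5 scale."""
    total_weight = sum(c.weight for c in criteria)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(c.weight * c.score for c in criteria) / total_weight


def verdict(criteria: list[Criterion], pass_mark: float = 3.5) -> dict:
    """Summarise the scorecard: overall score, pass/fail, and items needing mitigation."""
    failed_gates = [c.name for c in criteria if c.must_pass and c.score < c.threshold]
    # Any criterion under its threshold is a red/yellow item that needs a mitigation plan.
    needs_mitigation = [c.name for c in criteria if c.score < c.threshold]
    total = weighted_score(criteria)
    return {
        "score": total,
        "passed": not failed_gates and total >= pass_mark,
        "failed_gates": failed_gates,
        "needs_mitigation": needs_mitigation,
    }


if __name__ == "__main__":
    scorecard = [
        Criterion("CTI reliability", 0.30, 4.0, must_pass=True),
        Criterion("Routing accuracy", 0.25, 4.5, must_pass=True),
        Criterion("AI QA usefulness", 0.20, 2.5),
        Criterion("Reporting", 0.15, 4.0),
        Criterion("Cost predictability", 0.10, 3.5),
    ]
    print(verdict(scorecard))
```

Keeping hard gates separate from the weighted total stops a strong score on reporting from masking a failed CTI integration.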

2. Designing a 14-Day PoC That Actually Fits in 14 Days

The only way to keep a PoC to 14 days is to narrow the scope ruthlessly. That means saying “no” to testing every queue, every country and every exotic channel. Instead, pick the smallest set of journeys that represent your hardest realities: high-value customers, strict compliance, complex routing or painful legacy constraints. This is the same trade-off you make when applying a use-case-first buyer framework: depth on what matters beats shallow breadth.

Timeboxing also means pre-work. Before Day 1, get procurement, legal and security aligned on what they need to sign off on a pilot tenant, data sharing and number configuration. Re-use artefacts from your RFP templates and SLA models so you are not inventing checklists from scratch under time pressure.
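The one-page goals from Step 1 can double as a scope guard. A minimal sketch, assuming the goals are captured as structured data; the class names, field names and example targets are hypothetical, and the ranges simply mirror the checklist table (3–5 success criteria, 2–3 use cases, 8–20 pilot agents).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SuccessCriterion:
    kpi: str                 # e.g. "FCR", "AHT", "SLA", "CSAT"
    target: float
    higher_is_better: bool = True


@dataclass
class PocGoals:
    channels: list[str]
    regions: list[str]
    pilot_agents: int
    use_cases: list[str]
    criteria: list[SuccessCriterion] = field(default_factory=list)

    def scope_problems(self) -> list[str]:
        """Return reasons the scope is unlikely to fit in 14 days (empty list = OK)."""
        problems = []
        if not 3 <= len(self.criteria) <= 5:
            problems.append(f"expected 3-5 success criteria, got {len(self.criteria)}")
        if not 2 <= len(self.use_cases) <= 3:
            problems.append(f"expected 2-3 use cases, got {len(self.use_cases)}")
        if not 8 <= self.pilot_agents <= 20:
            problems.append(f"expected 8-20 pilot agents, got {self.pilot_agents}")
        kpis = [c.kpi for c in self.criteria]
        if len(kpis) != len(set(kpis)):
            problems.append("duplicate KPIs in success criteria")
        return problems


if __name__ == "__main__":
    goals = PocGoals(
        channels=["voice", "WhatsApp"],
        regions=["GCC"],
        pilot_agents=12,
        use_cases=["WISMO", "card block"],
        criteria=[
            SuccessCriterion("FCR", 0.70),
            SuccessCriterion("AHT", 300, higher_is_better=False),  # seconds; lower is better
            SuccessCriterion("CSAT", 4.2),
        ],
    )
    print(goals.scope_problems())  # []
```

Running the check when the goals page is drafted, and again whenever someone asks to "just add one more queue", makes scope creep visible before it costs days.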

3. Technical PoC Checklist: Routing, CTI, AI and Uptime

Technical fit is the backbone of the pilot. Start by validating routing and ACD: queues, skills, priorities, overflow rules and business hours for your highest-value use cases. Use patterns from ACD guides and multi-region architectures from zero-lag call system articles to simulate realistic load. You want to see how the platform behaves when multiple skills, languages and VIP segments are in play, not just default routing.
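To make the routing checks concrete, here is a toy model of skill-based routing with business hours, a capacity-triggered overflow chain and a VIP priority bump. It is a sketch for writing expected outcomes for test calls, not how any particular ACD works: the queue names, the "use case + language" skill rule, the `"fallback"` destination and the priority values 1 and 5 are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, time


@dataclass
class Queue:
    name: str
    skills: frozenset[str]          # skills the agents staffing this queue are assumed to have
    opens: time
    closes: time
    capacity: int                   # max waiting contacts before overflow kicks in
    overflow_to: str | None = None  # queue to spill into when this one is full

    def is_open(self, now: datetime) -> bool:
        return self.opens <= now.time() < self.closes


@dataclass
class Contact:
    use_case: str
    language: str
    vip: bool = False


def route(contact: Contact, queues: list[Queue], waiting: dict[str, int],
          now: datetime) -> tuple[str, int]:
    """Return (queue name, priority); a lower priority number is served first."""
    by_name = {q.name: q for q in queues}
    needed = {contact.use_case, contact.language}
    priority = 1 if contact.vip else 5
    for candidate in queues:  # queues are listed in order of preference
        if not needed <= candidate.skills or not candidate.is_open(now):
            continue
        current, visited = candidate, set()
        # Follow the overflow chain; overflow targets are assumed to be broader queues
        # that accept the contact even without an exact skill match.
        while current is not None and current.name not in visited:
            visited.add(current.name)
            if current.is_open(now) and waiting.get(current.name, 0) < current.capacity:
                return current.name, priority
            current = by_name.get(current.overflow_to) if current.overflow_to else None
    # Nothing open with matching skills and spare capacity: e.g. voicemail or callback.
    return "fallback", priority


if __name__ == "__main__":
    queues = [
        Queue("wismo_en", frozenset({"wismo", "en"}), time(8), time(20), capacity=2,
              overflow_to="general_en"),
        Queue("general_en", frozenset({"wismo", "card_block", "en"}), time(0), time(23, 59),
              capacity=50),
    ]
    morning = datetime(2024, 5, 6, 10, 0)
    print(route(Contact("wismo", "en"), queues, {}, morning))                         # ('wismo_en', 5)
    print(route(Contact("wismo", "en", vip=True), queues, {"wismo_en": 2}, morning))  # ('general_en', 1)
```

Each PoC routing scenario (VIP, after hours, queue full, unsupported language) becomes one expected (queue, priority) pair that you can compare with what the real platform actually does.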

Next, push CTI hard. A 14-day PoC should prove how well the platform integrates with your primary CRM, whether that’s Salesforce, HubSpot or Zendesk. Test screen pops, click-to-dial, auto-logging, and how recordings and dispositions are attached. Cross-reference this with the 12-month integration roadmap and your wider integration catalogue. If basic CTI is clunky, full AI stack integration will only be harder.
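A quick way to test auto-logging is to reconcile the platform's call log against CRM activity records after each test session. The sketch assumes both sides can be exported with a shared call ID and that each CRM activity carries a disposition and a recording link; the record shapes and function name are hypothetical, not the Salesforce, HubSpot or Zendesk data model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PlatformCall:
    call_id: str
    recorded: bool             # did the contact center platform store a recording?


@dataclass
class CrmActivity:
    call_id: str
    disposition: str | None    # wrap-up code the agent selected
    recording_url: str | None  # link back to the recording, if the CTI attached one


def cti_gaps(calls: list[PlatformCall], activities: list[CrmActivity]) -> dict[str, list[str]]:
    """Group call IDs by the CTI defect they reveal."""
    by_id = {a.call_id: a for a in activities}
    gaps: dict[str, list[str]] = {"not_logged": [], "no_disposition": [], "no_recording_link": []}
    for call in calls:
        activity = by_id.get(call.call_id)
        if activity is None:
            gaps["not_logged"].append(call.call_id)
            continue
        if not activity.disposition:
            gaps["no_disposition"].append(call.call_id)
        if call.recorded and not activity.recording_url:
            gaps["no_recording_link"].append(call.call_id)
    return gaps


if __name__ == "__main__":
    calls = [PlatformCall("c1", True), PlatformCall("c2", True), PlatformCall("c3", False)]
    activities = [
        CrmActivity("c1", "resolved", "https://example.invalid/rec/c1"),
        CrmActivity("c2", None, None),
    ]
    print(cti_gaps(calls, activities))
```

Screen pops and click-to-dial still need hands-on checks by agents; this only covers what actually lands in the CRM.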

4. Operational PoC Checklist: Use Cases, Agents and Metrics

Operational success is about whether agents and supervisors can actually run a day of work inside the pilot stack without constant friction. Start by scripting realistic calls for each use case: not just “happy path” flows, but the messy reality your agents handle now. Combine this with targeted routing and channel patterns from assets like the WhatsApp + voice playbook and omnichannel guides so you are testing real workflows, not isolated features.

Then, define metric targets before the pilot starts. For each PoC day, track SLAs, abandonment, FCR, AHT, CSAT, CES and promoter / detractor patterns. Use the same definitions as your efficiency metric benchmarks so you’re not debating formulas mid-pilot. Your goal is not to beat mature production numbers immediately; it is to see whether the new stack can match or improve critical KPIs under controlled conditions with limited tuning.
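Agreeing on formulas up front is easier when they are written down as code. The sketch below computes service level, abandonment, AHT and FCR from per-call records under one common set of definitions, which are assumptions here: service level as calls answered within a threshold divided by calls offered (20 seconds, purely as an example), AHT as talk + hold + wrap-up averaged over answered calls, and FCR over answered calls with a known resolution flag. Your benchmarks may define these differently (for example, by excluding short abandons), so adjust the code before the pilot, not during it.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class CallRecord:
    wait_s: float                  # time in queue before answer or abandon
    answered: bool
    talk_s: float = 0.0
    hold_s: float = 0.0
    wrap_s: float = 0.0
    first_contact_resolution: bool | None = None  # None = outcome unknown


def kpis(calls: list[CallRecord], sl_threshold_s: float = 20.0) -> dict[str, float | None]:
    offered = len(calls)
    if offered == 0:
        raise ValueError("no calls offered")
    answered = [c for c in calls if c.answered]
    within_sl = sum(1 for c in answered if c.wait_s <= sl_threshold_s)
    known = [c for c in answered if c.first_contact_resolution is not None]
    return {
        "service_level": within_sl / offered,
        "abandonment_rate": (offered - len(answered)) / offered,
        "aht_s": mean(c.talk_s + c.hold_s + c.wrap_s for c in answered) if answered else None,
        "fcr": sum(c.first_contact_resolution for c in known) / len(known) if known else None,
    }


if __name__ == "__main__":
    day = [
        CallRecord(10, True, 200, 30, 40, True),
        CallRecord(35, True, 300, 0, 60, False),
        CallRecord(50, False),
        CallRecord(5, True, 150, 10, 20, True),
    ]
    print(kpis(day))
```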

5. Risk, Compliance and High-Risk Flow Checklist

No PoC is complete if it ignores risk and compliance. At minimum, your 14-day pilot should test recording consent, storage, access and retention rules; DNC and consent logic for outbound; and behaviour in regulated journeys like fraud, payments and healthcare or banking interactions. Use specialist content such as recording compliance overviews, TCPA workflow guides and fraud & KYC journey patterns to design your risk scenarios.
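For the outbound leg, each dial attempt can be checked against DNC, consent and calling hours before the dialer places it. The sketch below assumes the called party's time zone is known per lead and defaults to the federal TCPA window for telephone solicitations (no calls before 8 a.m. or after 9 p.m. at the called party's local time); some US states impose narrower hours or extra rules, so the window is a parameter rather than a constant. The `Lead` type and `dial_check` function are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, time, timedelta, timezone, tzinfo


@dataclass
class Lead:
    phone: str          # E.164 number, e.g. "+13125550100"
    tz: tzinfo          # called party's time zone; prefer zoneinfo.ZoneInfo(...) in practice
    has_consent: bool   # prior express consent captured and auditable


def dial_check(lead: Lead, now_utc: datetime, dnc: set[str],
               window: tuple[time, time] = (time(8, 0), time(21, 0))) -> tuple[bool, list[str]]:
    """Return (allowed, reasons); every reason is worth writing to the audit trail."""
    reasons = []
    if lead.phone in dnc:
        reasons.append("number is on the DNC list")
    if not lead.has_consent:
        reasons.append("no recorded consent")
    local = now_utc.astimezone(lead.tz).time()
    start, end = window
    # End is treated as exclusive, so a 21:00 attempt is blocked (the conservative reading).
    if not start <= local < end:
        reasons.append(f"outside calling window (local time {local:%H:%M})")
    return not reasons, reasons


if __name__ == "__main__":
    central_std = timezone(timedelta(hours=-6))  # fixed offset for the demo; ignores DST
    lead = Lead("+13125550100", central_std, has_consent=True)
    print(dial_check(lead, datetime(2024, 1, 15, 16, 0, tzinfo=timezone.utc), dnc=set()))
    print(dial_check(lead, datetime(2024, 1, 16, 4, 0, tzinfo=timezone.utc), dnc=set()))
```

Returning the reasons rather than a bare boolean is what makes the "audit trails are clear" evidence in Step 10 cheap to produce.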

For verticals like healthcare, banking and e-commerce, layered pilots may be required. For example, you might run a focused HIPAA scheduling trial using playbooks from healthcare contact center articles, or a KYC-heavy PoC for fintech firms using models from banking & fintech guides. The point is to validate that the platform can sustain your riskiest flows—not just generic billing or support chats.

6. 14-Day PoC Execution Plan (Day 1–14)

Days 1–3: Scope, numbers, CTI. Finalise the one-page PoC goals, pick 2–3 core use cases, secure pilot numbers and wire CTI to your primary CRM. Make sure legal and security have signed off on data handling and that you’ve reused as much as possible from your migration planning. If you can’t pull this alignment together in three days, the PoC will drift.

Days 4–7: Routing, agents, AI and compliance. Implement routing, IVR, voicebots, AI agent assist and QA for your selected flows. Onboard a small but representative agent cohort—including WFH setups—and enable full recording with compliance controls. Run your first low-volume live traffic and fix obvious issues quickly. This is where patterns from real-time coaching tools and 100% coverage QA become real.

Days 8–11: Full PoC days and analytics. Commit to at least two full PoC days where a meaningful percentage of your volume for those use cases runs through the pilot stack. Supervisors should monitor dashboards, wallboards and coaching tools heavily. After each day, pull reports and compare them with your baseline numbers using KPI definitions from analytics playbooks and CX score frameworks.
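Comparing each PoC day with the baseline is simpler if "match" and "improve" are defined numerically. A minimal sketch, assuming KPIs are already computed on both sides with the same definitions and that a relative change within ±5% (an arbitrary, adjustable tolerance) counts as a match; the function name and KPI keys are illustrative.

```python
def compare_to_baseline(poc: dict[str, float], baseline: dict[str, float],
                        higher_is_better: dict[str, bool],
                        tolerance: float = 0.05) -> dict[str, str]:
    """Label each baseline KPI as improved, matched, regressed, missing or no_baseline."""
    verdicts = {}
    for kpi, base in baseline.items():
        if kpi not in poc:
            verdicts[kpi] = "missing"
            continue
        if base == 0:
            verdicts[kpi] = "no_baseline"  # relative change is undefined
            continue
        change = (poc[kpi] - base) / abs(base)
        if not higher_is_better[kpi]:
            change = -change  # e.g. a longer AHT counts as a regression
        if change > tolerance:
            verdicts[kpi] = "improved"
        elif change < -tolerance:
            verdicts[kpi] = "regressed"
        else:
            verdicts[kpi] = "matched"
    return verdicts


if __name__ == "__main__":
    baseline = {"SL": 0.80, "AHT": 300, "FCR": 0.70, "CSAT": 4.2}
    poc_day = {"SL": 0.85, "AHT": 330, "FCR": 0.71}
    direction = {"SL": True, "AHT": False, "FCR": True, "CSAT": True}
    print(compare_to_baseline(poc_day, baseline, direction))
```

A "missing" label is itself a finding: if a KPI cannot be pulled from the pilot stack, that belongs on the reporting scorecard.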

Days 12–14: Cost, scorecard, decision. Map PoC usage into cost models using pricing breakdowns, cost calculators and TCO comparisons. Then, score the pilot using your predefined criteria and decide: expand, iterate or stop. If you stop, capture why—so the next PoC can be more targeted instead of throwing everything away.
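Mapping PoC usage into a monthly estimate is mostly arithmetic, but writing it down exposes which line items grow fastest. The sketch assumes usage scales linearly with seats and operating days; the type and function names are hypothetical, and every rate in the example is a made-up placeholder rather than any vendor's price. Recording storage also accumulates month on month under your retention policy, which a single-month figure hides.

```python
from dataclasses import dataclass


@dataclass
class PocUsage:
    seats: int
    live_days: int          # full PoC days the usage was measured over
    voice_minutes: float
    storage_gb: float       # new recording storage created during those days
    ai_units: float         # whatever unit the vendor bills AI assist / QA in


@dataclass
class RateCard:             # hypothetical prices, for illustration only
    seat_month: float
    per_minute: float
    storage_gb_month: float
    per_ai_unit: float
    addons_month: float = 0.0


def monthly_estimate(usage: PocUsage, rates: RateCard, production_seats: int,
                     operating_days: int = 22) -> dict[str, float]:
    """Linearly extrapolate PoC usage to one production month and price each line item."""
    factor = (production_seats / usage.seats) * (operating_days / usage.live_days)
    items = {
        "seats": production_seats * rates.seat_month,
        "minutes": usage.voice_minutes * factor * rates.per_minute,
        "storage": usage.storage_gb * factor * rates.storage_gb_month,  # first month only
        "ai": usage.ai_units * factor * rates.per_ai_unit,
        "addons": rates.addons_month,
    }
    items["total"] = sum(items.values())
    return items


if __name__ == "__main__":
    usage = PocUsage(seats=10, live_days=3, voice_minutes=6000, storage_gb=5, ai_units=2000)
    rates = RateCard(seat_month=100, per_minute=0.01, storage_gb_month=0.5,
                     per_ai_unit=0.02, addons_month=200)
    for item, cost in monthly_estimate(usage, rates, production_seats=100,
                                       operating_days=30).items():
        print(f"{item:>8}: {cost:,.2f}")
```

Breaking the total into line items is what lets Finance spot AI or storage sprawl before it shows up on a real invoice.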

7. When to Call the PoC a Success (Or a Useful Failure)

A PoC is successful when it answers the right questions, not when it proves a vendor “perfect.” The most important outcome is clarity: are you comfortable building your 12-month roadmap on this stack, from CTI to full AI, or should you keep evaluating other options, such as AI-powered platforms, Five9 replacements or Aircall alternatives?

A “useful failure” is almost as valuable. If a PoC reveals brittle integration, poor uptime, weak GCC routing or painful admin tooling, you’ve just saved yourself a year of replatforming pain. Capture that learning and feed it back into your buyer framework, RFPs and comparison matrices. The next pilot will be sharper, and your internal stakeholders will trust the process more, because they’ve seen it surface reality instead of confirmation bias.